Jonathan Richard Schwarz

I'm a Visiting Professor at Imperial College London and the Head of AI Research at Thomson Reuters (TR), leading TR's Foundational Research Team. In addition, I serve as an Expert Advisor to the UK's AI Security Institute.

I joined TR through the acquisition of Safe Sign Technologies, for which I served as Co-Founder and Chief Scientific Officer (CSO).

Previously, I was a Research Fellow at Harvard University and a Senior Research Scientist at Google DeepMind. I obtained my PhD from the joint DeepMind-University College London programme, advised by Yee Whye Teh and Peter Latham. My thesis focused on sparse parameterisations and knowledge transfer for efficient Machine Learning. Before that, I spent two years at the Gatsby Computational Neuroscience Unit and graduated top of the class from the University of Edinburgh.

My research aims to build (i) efficient, (ii) general and (iii) robust Machine Learning systems. A central paradigm in my approach is the design of algorithms that abstract the knowledge and skills present in related problems and reuse them to learn future tasks efficiently. In this way, agents gradually build diverse repertoires of skills, allowing them to transfer to future tasks using only a fraction of the learning time and/or data that would otherwise be required.


News

Research

Selected papers are highlighted.


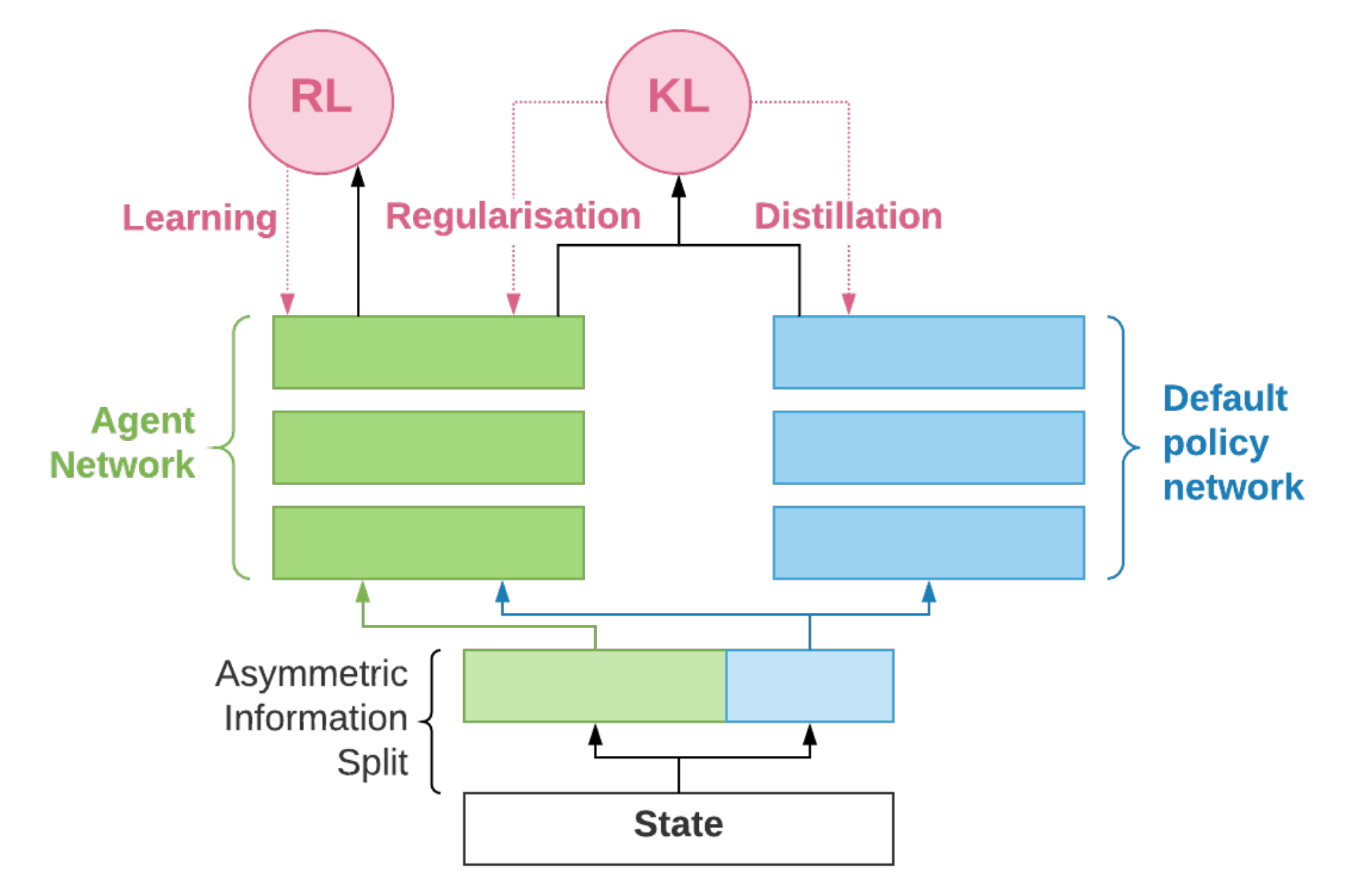

Behavior Priors for Efficient Reinforcement Learning

Dhruva Tirumala, Alexandre Galashov, Hyeonwoo Noh, Leonard Hasenclever, Razvan Pascanu, Jonathan Richard Schwarz, Guillaume Desjardins, Wojciech Marian Czarnecki, Arun Ahuja, Yee Whye Teh, Nicolas Heess

Journal of Machine Learning Research (JMLR) 2022


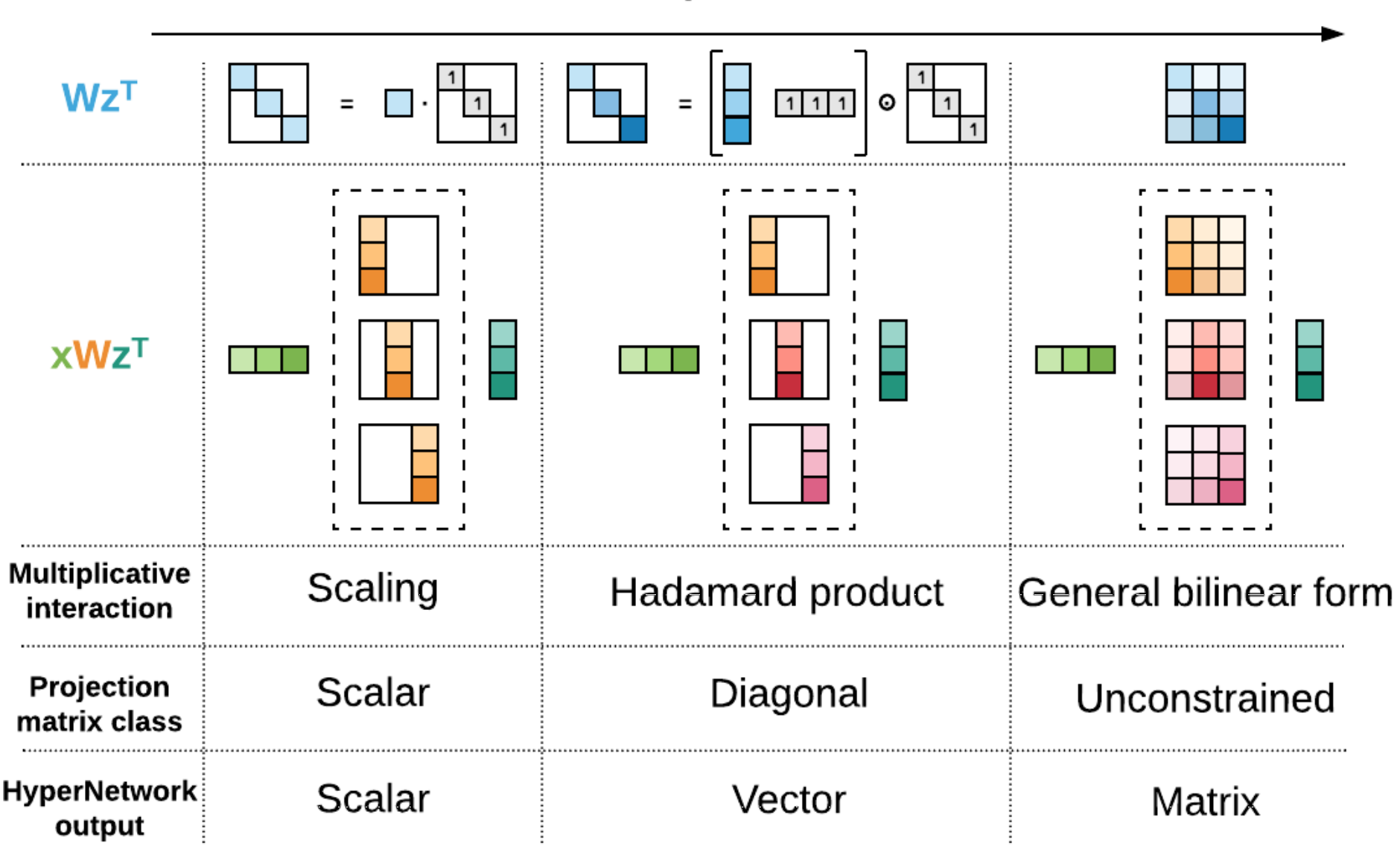

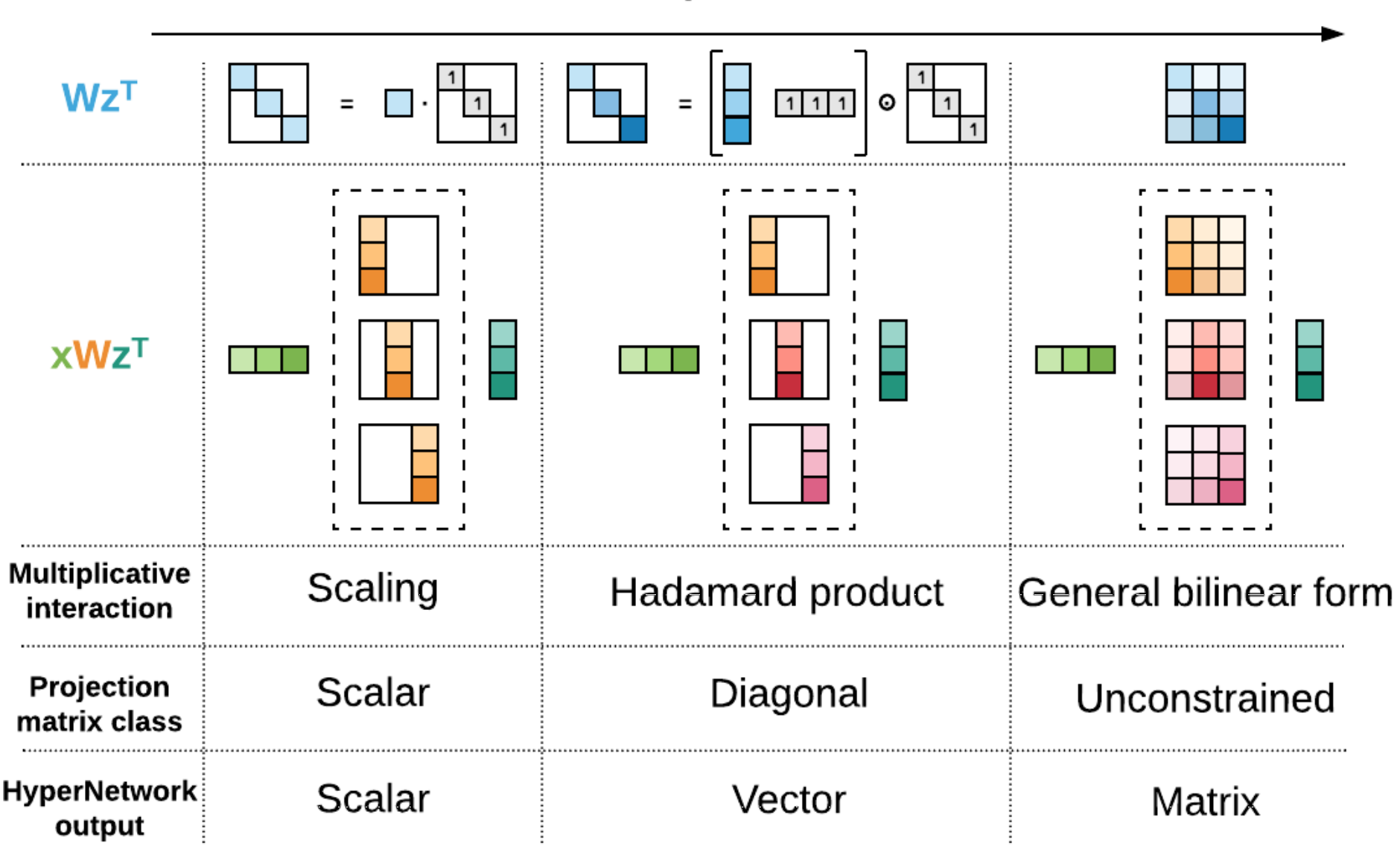

Multiplicative Interactions and Where to Find Them

Siddhant M. Jayakumar, Wojciech M. Czarnecki, Jacob Menick, Jonathan Richard Schwarz, Jack Rae, Simon Osindero, Yee Whye Teh, Tim Harley, Razvan Pascanu

International Conference on Learning Representations (ICLR) 2020


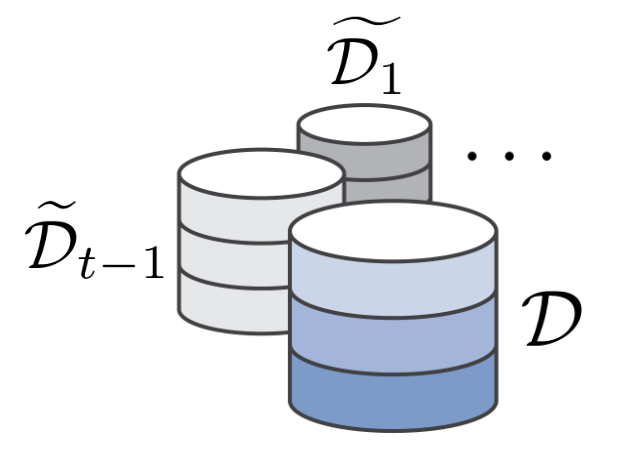

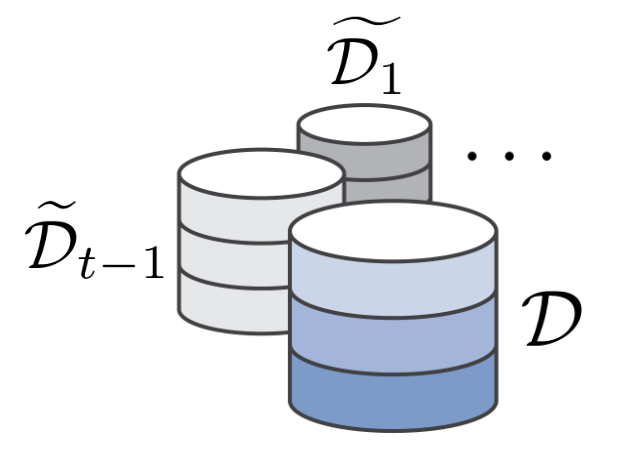

Experience Replay for Continual Learning

David Rolnick, Arun Ahuja, Jonathan Richard Schwarz, Timothy P. Lillicrap, Greg Wayne

Neural Information Processing Systems (NeurIPS) 2019


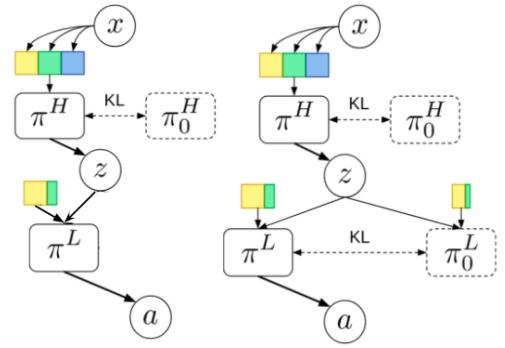

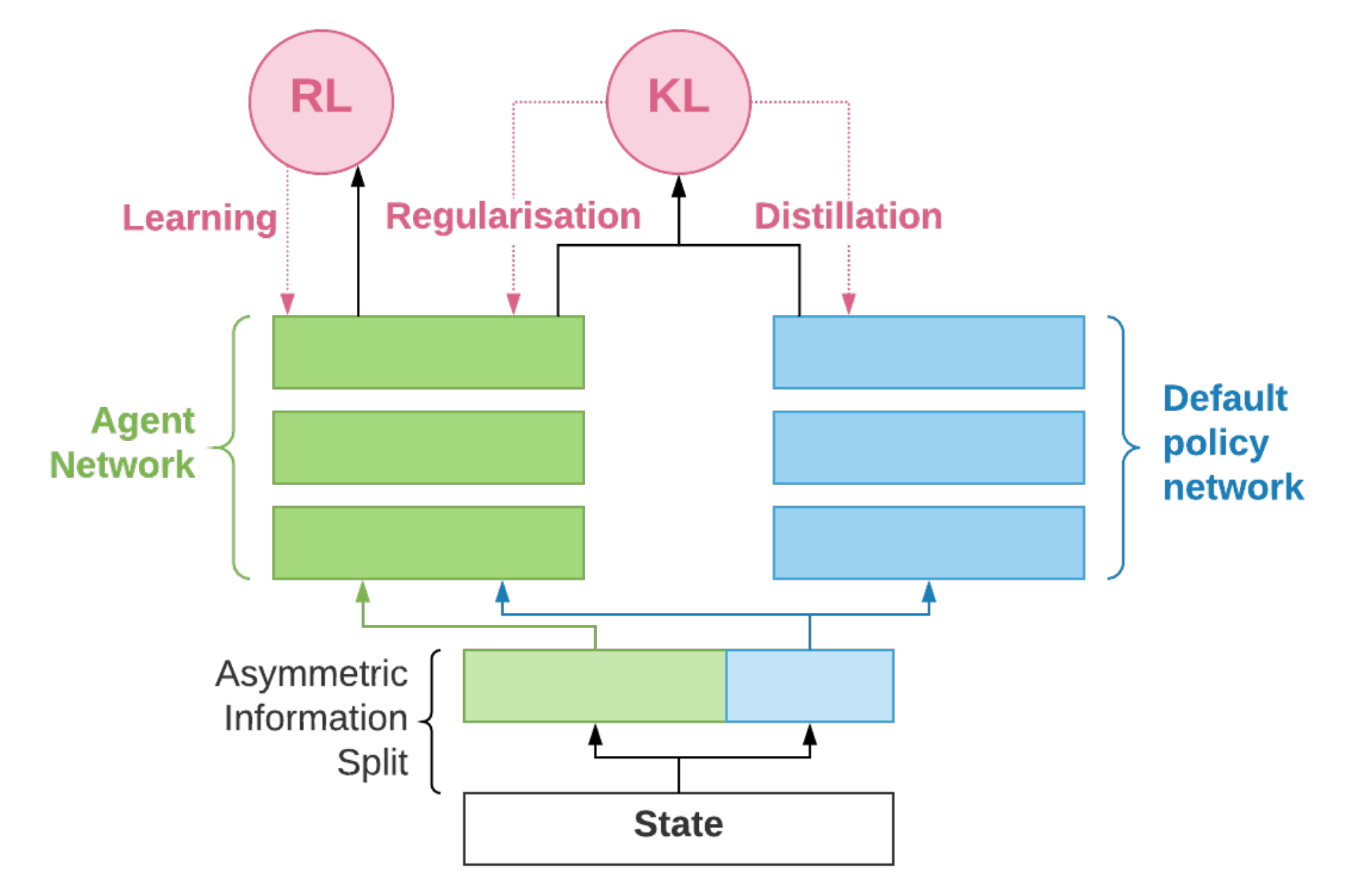

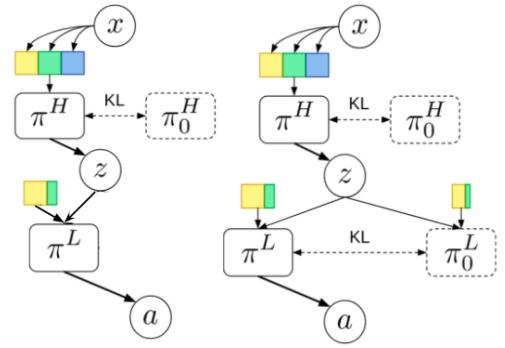

Information asymmetry in KL-regularized RL

Alexandre Galashov, Siddhant M. Jayakumar, Leonard Hasenclever, Dhruva Tirumala, Jonathan Richard Schwarz, Guillaume Desjardins, Wojciech M. Czarnecki, Yee Whye Teh, Razvan Pascanu, Nicolas Heess

International Conference on Learning Representations (ICLR) 2019


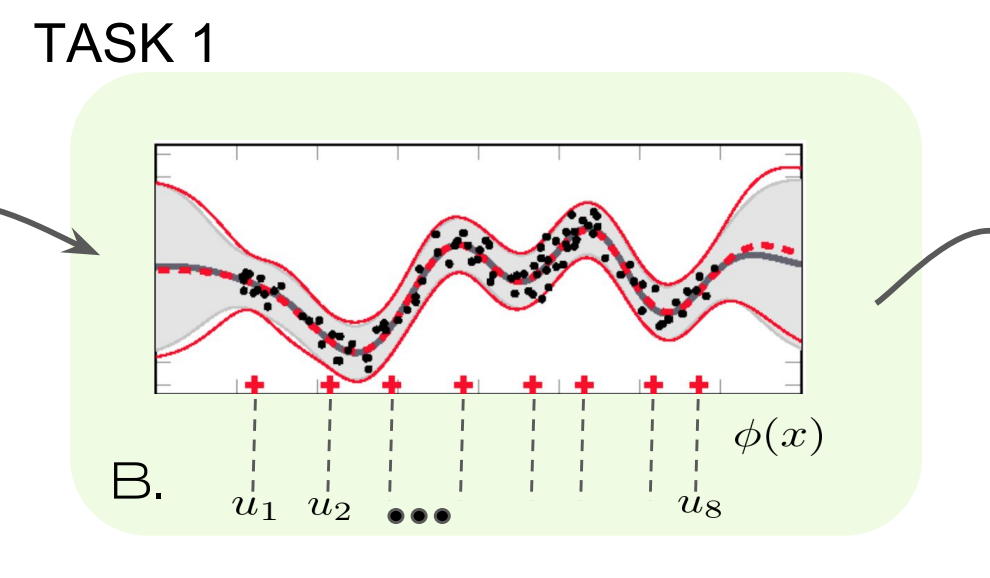

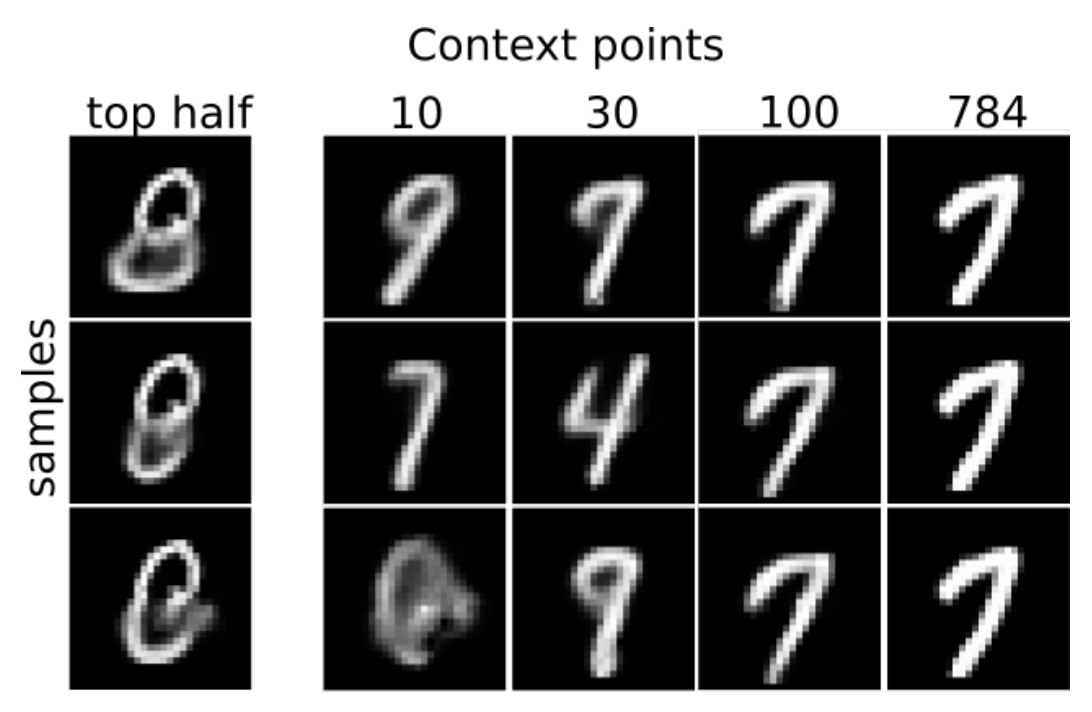

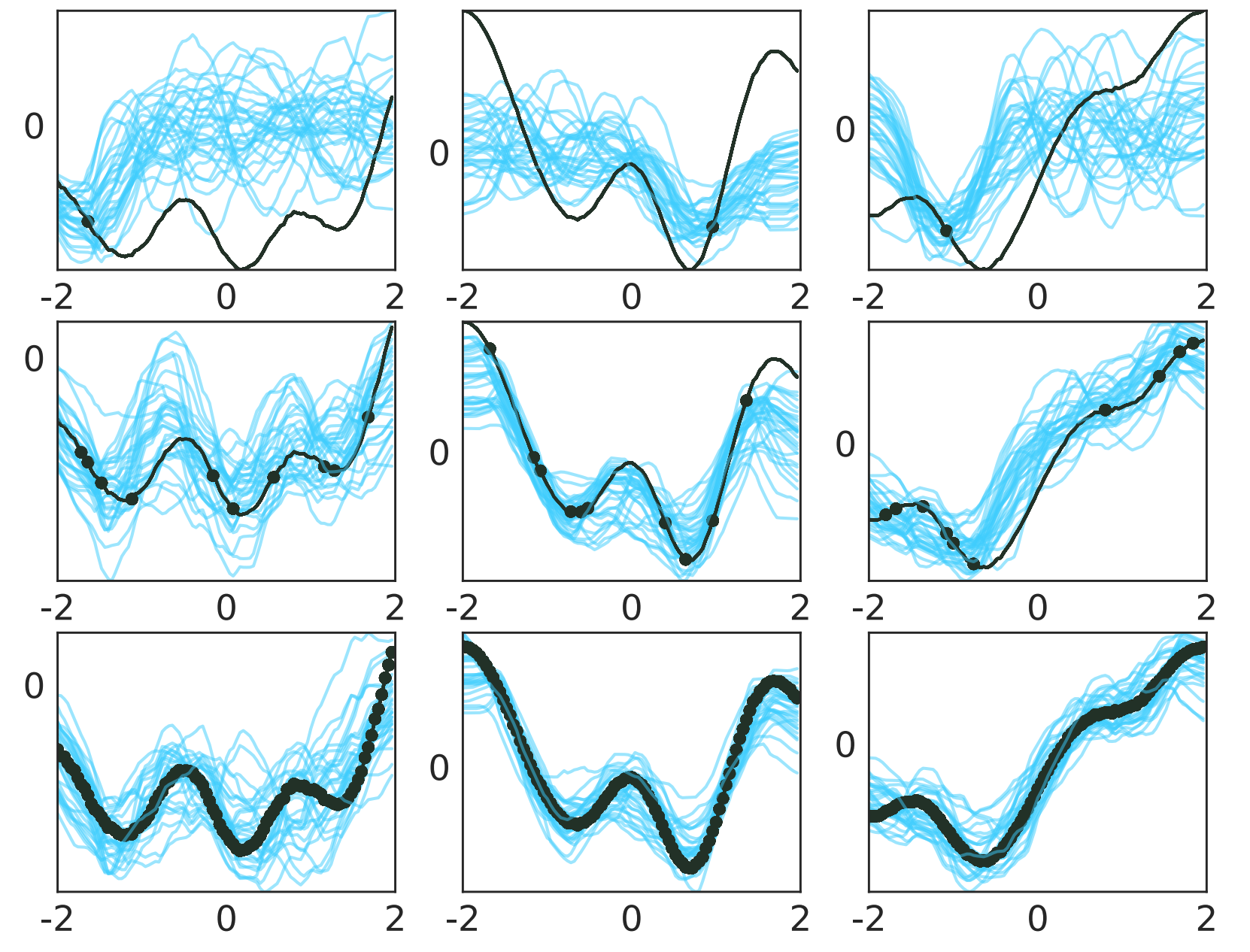

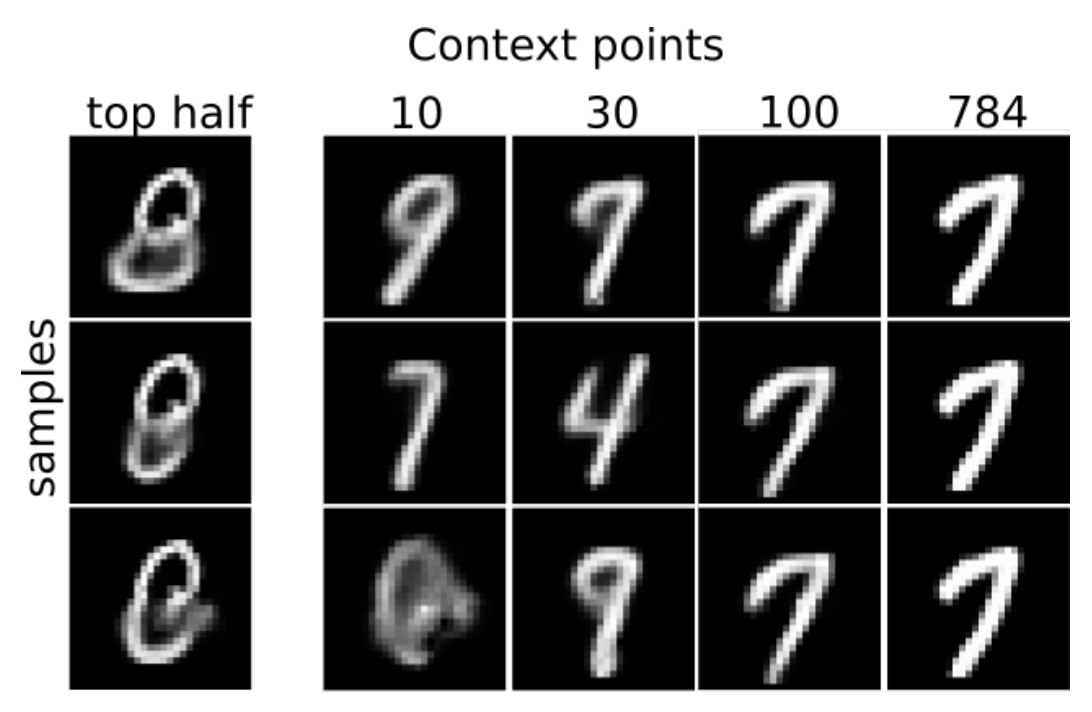

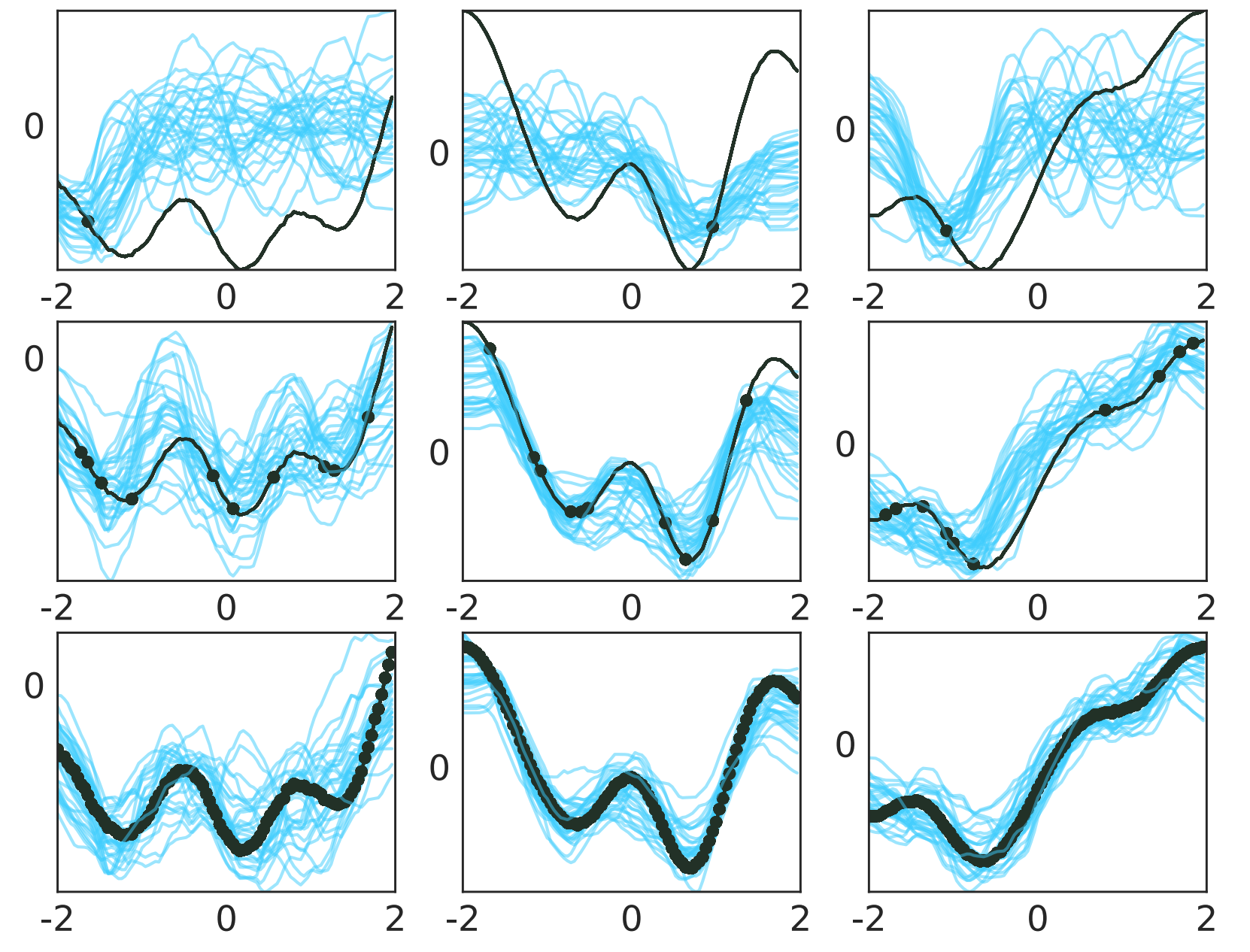

Empirical Evaluation of Neural Process Objectives

Tuan Anh Le, Hyunjik Kim, Marta Garnelo, Dan Rosenbaum, Jonathan Richard Schwarz, Yee Whye Teh

NeurIPS 2018 Workshop on Bayesian Deep Learning


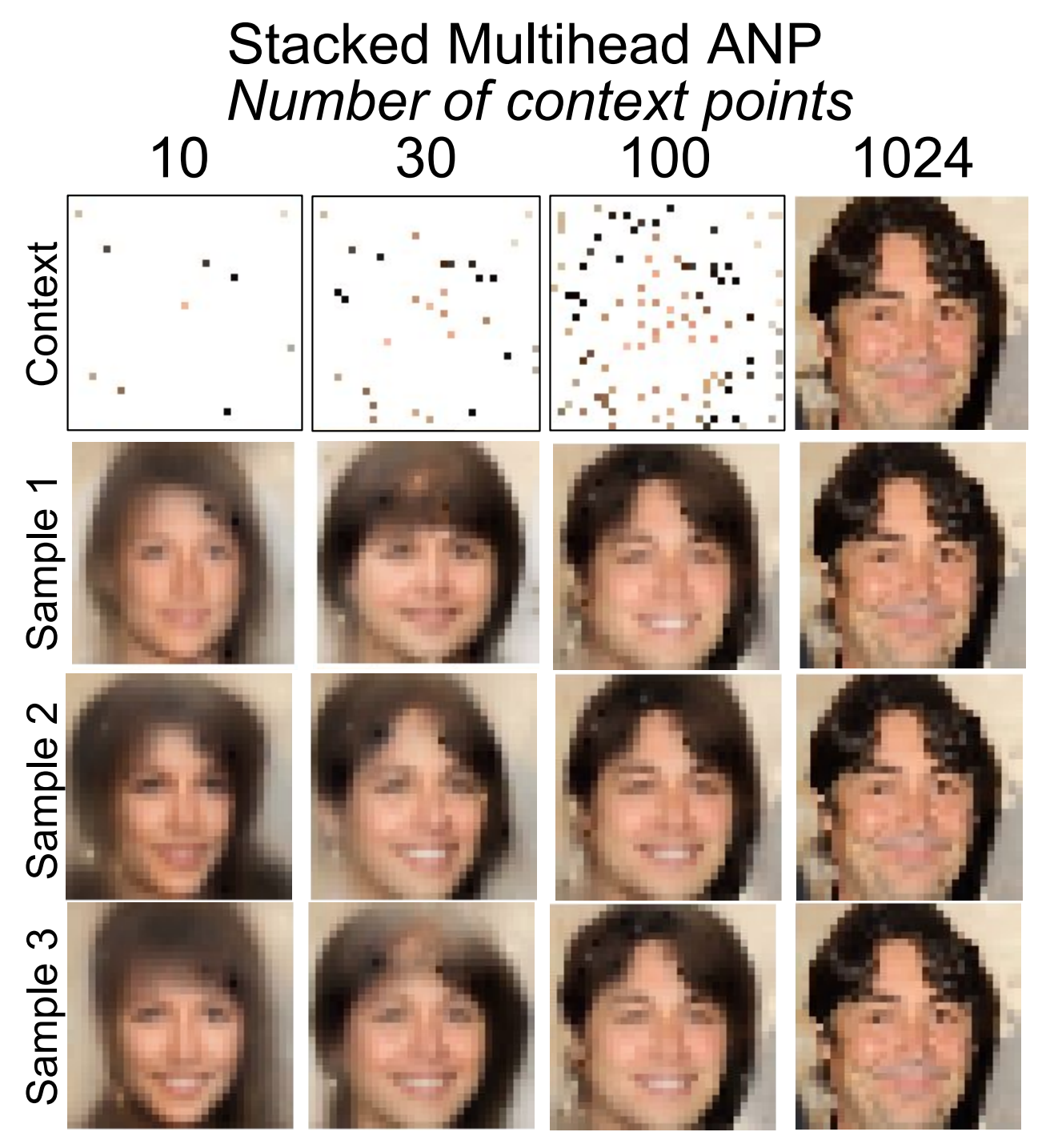

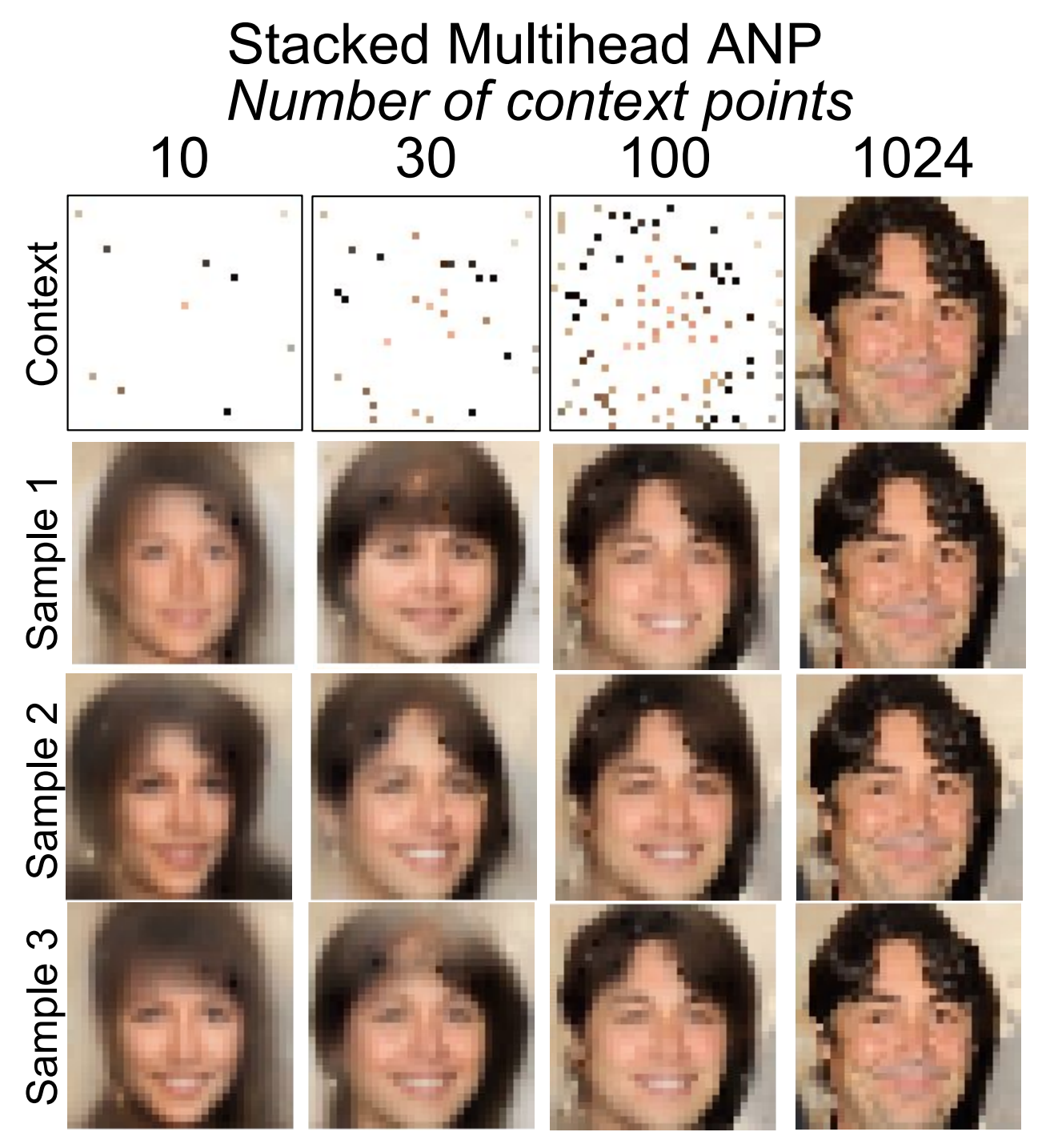

Attentive Neural Processes

Hyunjik Kim, Andriy Mnih, Jonathan Richard Schwarz, Marta Garnelo, SM Ali Eslami, Dan Rosenbaum, Oriol Vinyals, Yee Whye Teh

International Conference on Learning Representations (ICLR) 2019

💻 Code


Neural Processes

Marta Garnelo, Jonathan Richard Schwarz, Dan Rosenbaum, Fabio Viola, Danilo J Rezende, SM Eslami, Yee Whye Teh

ICML 2018 Workshop on Theoretical Foundations and Applications of Deep Generative Models (Spotlight talk)

🗣️ Talk (credit to Marta)

💻 Code


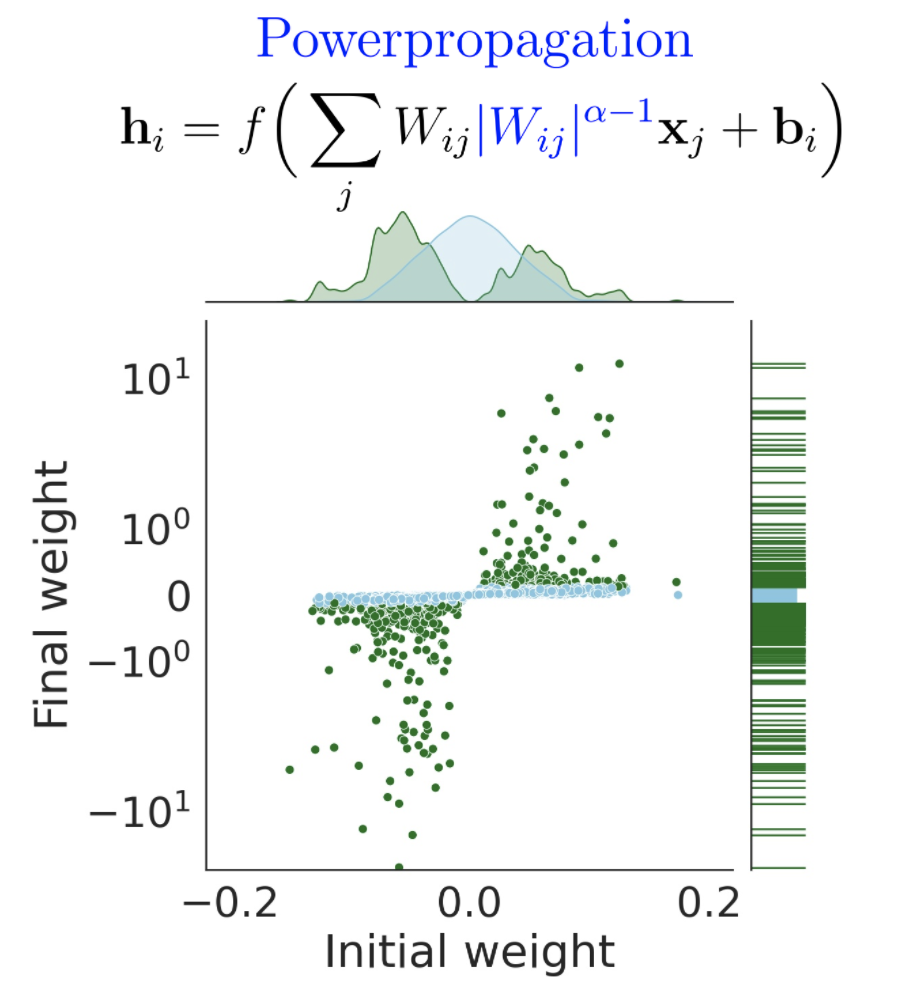

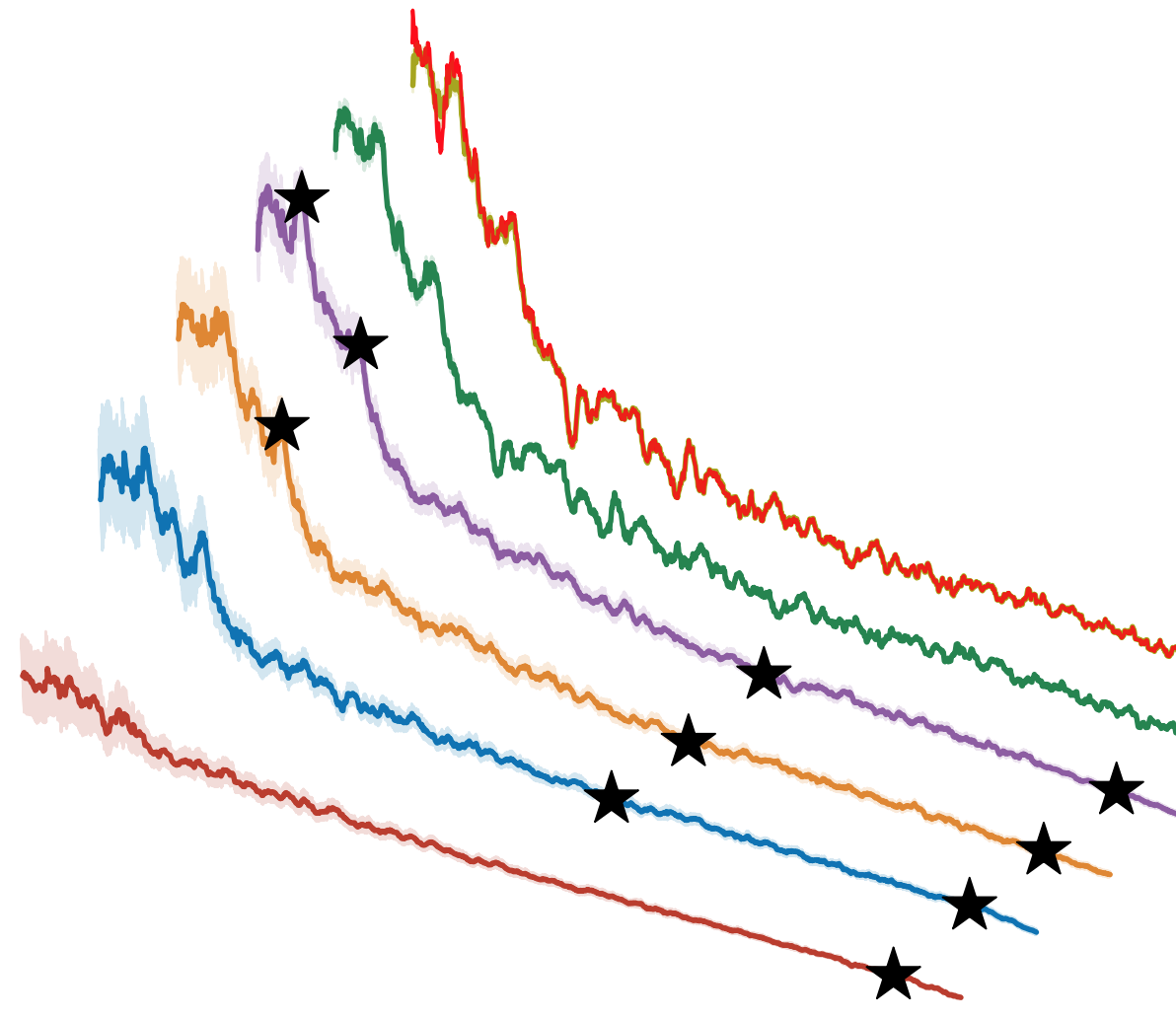

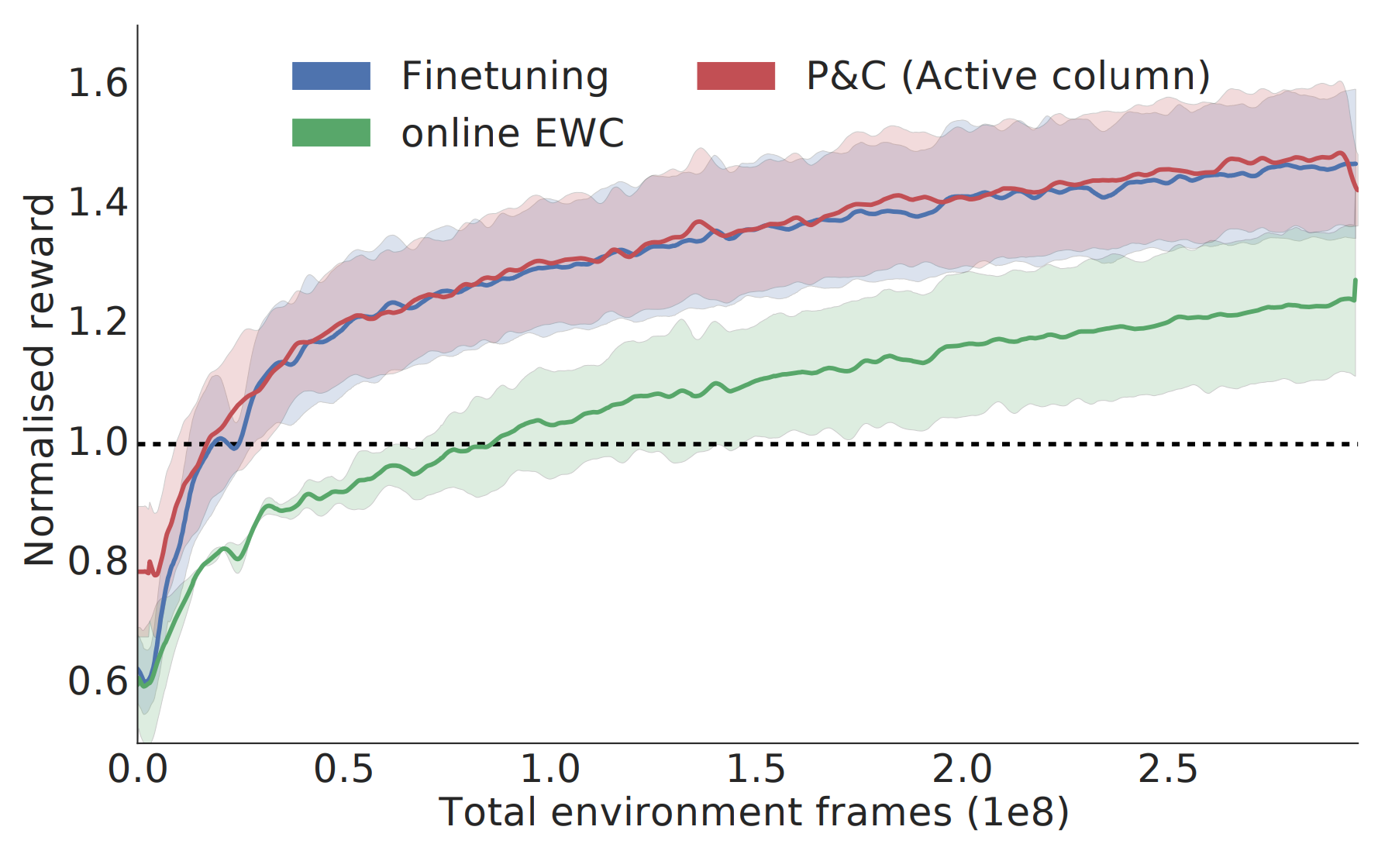

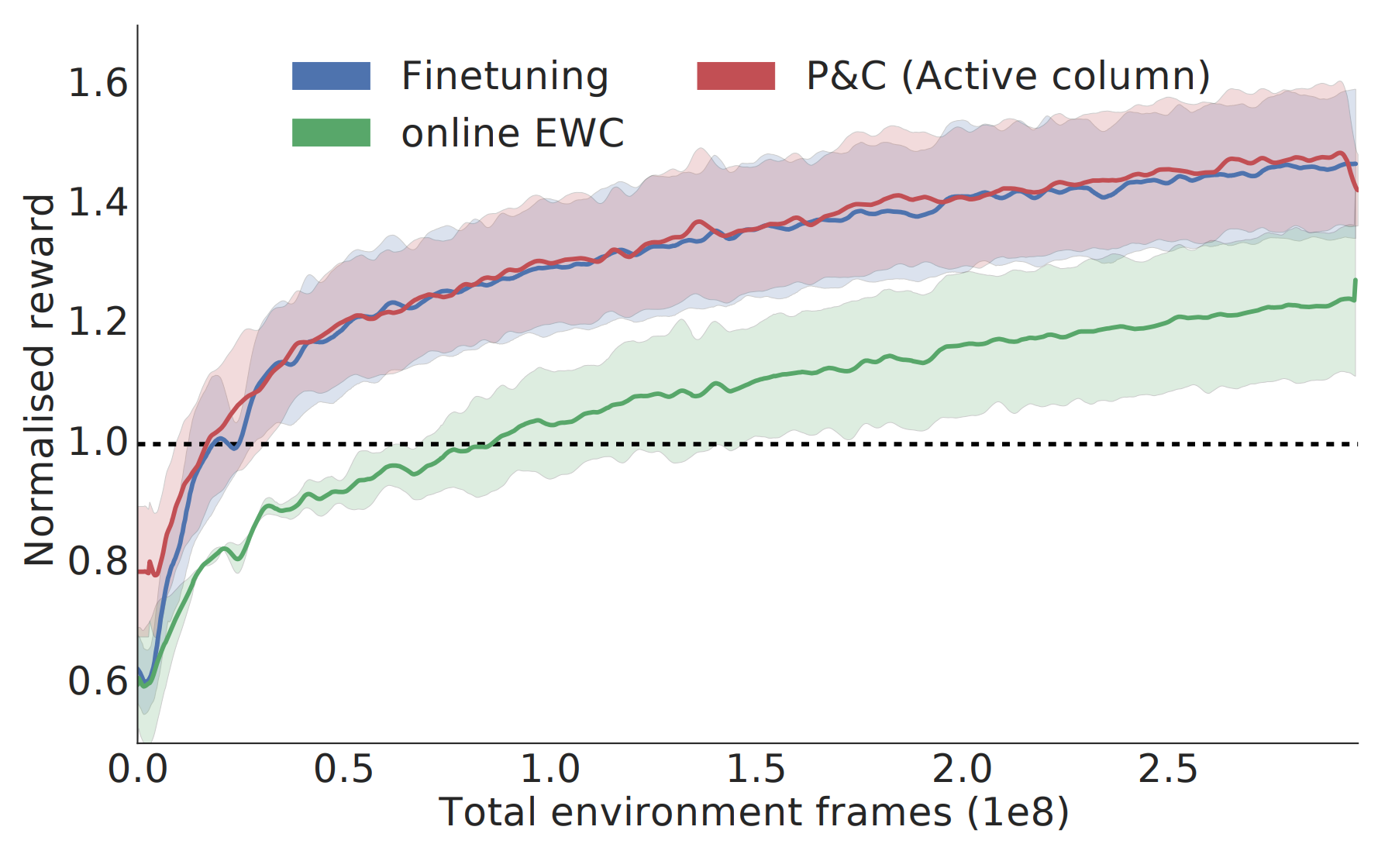

Progress & Compress: A scalable framework for continual learning

Jonathan Richard Schwarz, Jelena Luketina, Wojciech M. Czarnecki, Agnieszka Grabska-Barwinska, Yee Whye Teh, Raia Hadsell°, Razvan Pascanu°

International Conference on Machine Learning (ICML) 2018 (Long oral)

🗣️ Talk

📊 Data (Sequential Omniglot)

°: Joint senior authorship


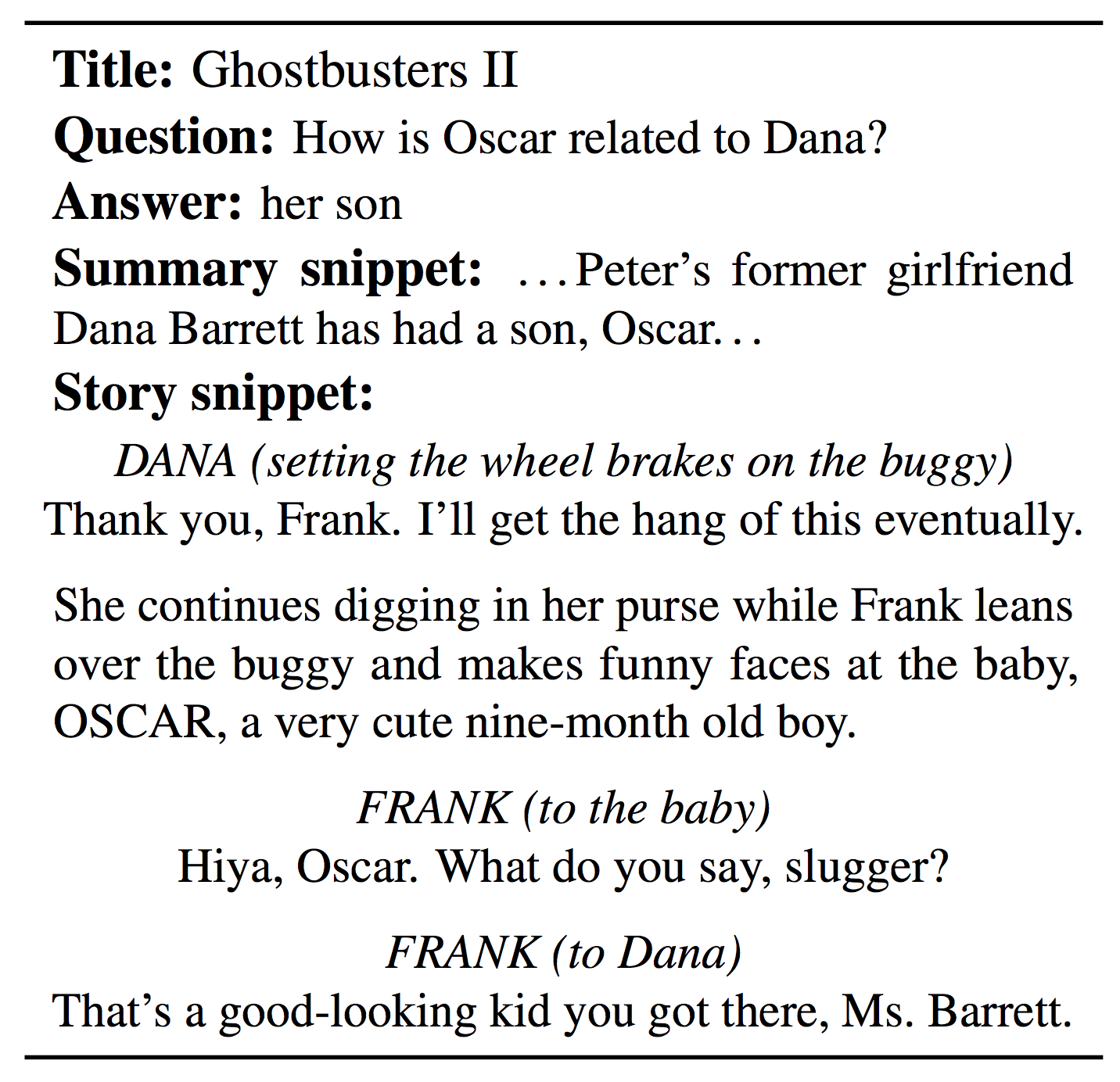

The NarrativeQA Reading Comprehension Challenge

Tomas Kocisky, Jonathan Richard Schwarz, Phil Blunsom, Chris Dyer, Karl Moritz Hermann, Gabor Melis, Edward Grefenstette

Transactions of the Association for Computational Linguistics (TACL) 2018

🗣️ Talk (credit to Tomas)

📊 Data


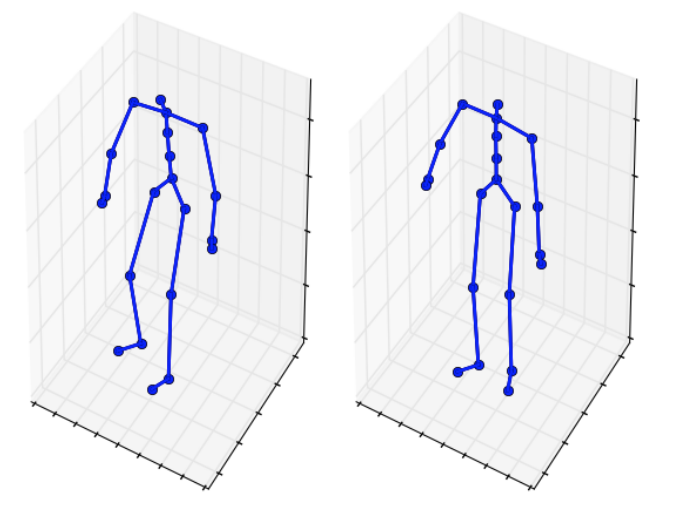

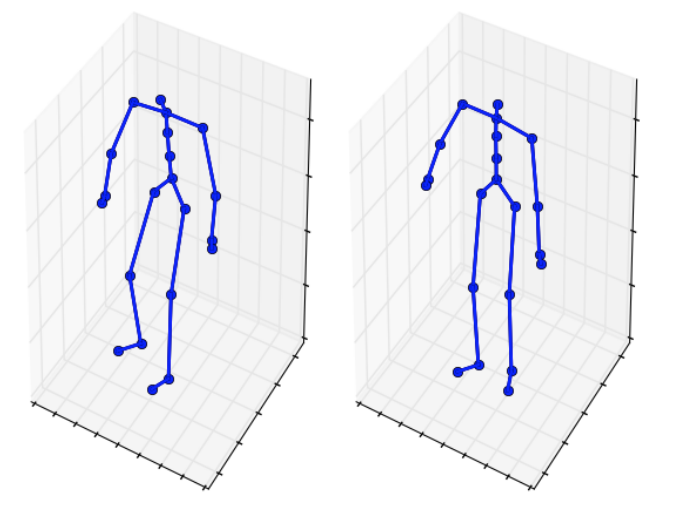

A Recurrent Variational Autoencoder for Human Motion Synthesis

Ikhsanul Habibie, Daniel Holden, Jonathan Richard Schwarz, Joe Yearsley, Taku Komura

British Machine Vision Conference (BMVC) 2017

💻 Code

📊 Data


Academic Workshops

Based on Jon Barron's website.
